So, you have a Kubernetes cluster up and running. The control plane is live, and the worker nodes are ready to handle the load. Shall we go ahead and deploy our business apps? Well, before doing that, we might want to prepare the ground a little. Like any self-respecting business, we want to be able to monitor the apps we deploy, have backups and autoscaling in place, and be able to search through the logs when something goes wrong. These are just a few of the requirements we'd like to address before actually deploying the apps.

Fortunately, there are plenty of tools that solve these infrastructure requirements. Furthermore, most of these infra apps have an associated helm chart. These are just a few examples:

- prometheus/grafana for monitoring

- fluent-bit for logging

- velero for backups

- nginx ingress controller for load balancing

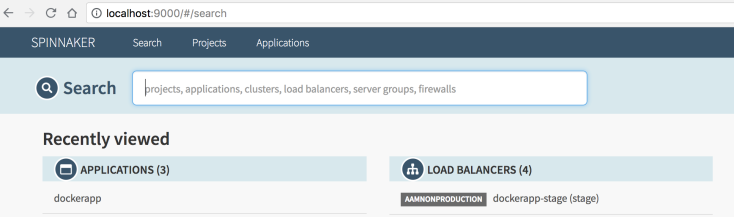

- spinnaker for CI/CD

- external-dns for syncing k8s services with the DNS provider

- cluster-autoscaler for scaling the worker nodes

and so on…

The question is: how do we deploy them in the Kubernetes cluster? And as more Kubernetes clusters are spun up, how can we install these infra apps in a reliable, maintainable and automated fashion?

We explored a few ways we could do it:

1. Having an umbrella helm chart

2. Using the Terraform helm provider

3. Using the helmfile command line interface

In this post we’re going to explore helmfile, which we’ve been using successfully for our infra apps deployment.

Helmfile

As someone put it, helmfile is like a helm for your helm! It lets us declare the helm charts we want in one place and deploy them, as we'll see in the sections below.

A basic helmfile structure

The structure below is inspired by Cloud Posse's GitHub repo. We have a main helmfile, which contains an ordered list of sub-helmfiles, each describing a release (helm chart) that we want to deploy.

helmfile.yaml

    ---
    # Ordered list of releases.
    helmfiles:
      - "commons/repos.yaml"
      - "releases/nginx-ingress.yaml"
      - "releases/kube2iam.yaml"
      - "releases/cluster-autoscaler.yaml"
      - "releases/dashboard.yaml"
      - "releases/external-dns.yaml"
      - "releases/kube-state-metrics.yaml"
      - "releases/prometheus.yaml"
      - "releases/thanos.yaml"
      - "releases/fluent_bit.yaml"
      - "releases/spinnaker.yaml"
      - "releases/velero.yaml"

Each release file (which is basically a sub-helmfile) looks something like this:

releases/nginx-ingress.yaml

    ---
    bases:
      - ../commons/environments.yaml
    ---
    releases:
      - name: "nginx-ingress"
        namespace: "nginx-ingress"
        labels:
          chart: "nginx-ingress"
          repo: "stable"
          component: "balancing"
        chart: "stable/nginx-ingress"
        version: {{ .Environment.Values.helm.nginx_ingress.version }}
        wait: true
        # Reads helm.nginx_ingress.installed from the environment values; defaults to true if unset
        installed: {{ .Environment.Values.helm | getOrNil "nginx_ingress.installed" | default true }}
        set:
          - name: "controller.metrics.enabled"
            value: {{ .Environment.Values.helm.nginx_ingress.metrics_enabled }}

If you look above, you will see that we are templatizing the helm deployment for the nginx-ingress chart. The actual values (e.g. the chart version, or whether metrics are enabled) come from an environment file:

commons/environments.yaml

    environments:
      default:
        values:
          - ../auto-generated.yaml

Here, auto-generated.yaml is a plain YAML file containing the values:

    helm:
      nginx_ingress:
        installed: true
        version: 1.17.1
        metrics_enabled: true
      velero:
        installed: true
        s3_bucket: my-s3-bucket
      ...

Note that the example above uses a single environment (named default), but you could define more (e.g. dev/stage/prod) and switch between them. Each environment would have its own set of values, allowing you to customize the deployment even further, as we'll see in the section below.
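For instance, a multi-environment version of commons/environments.yaml might look like the sketch below. The dev-values.yaml and prod-values.yaml file names are hypothetical; each would carry the same keys as auto-generated.yaml, with values tuned for that environment.

```yaml
# commons/environments.yaml (hypothetical multi-environment layout)
environments:
  default:
    values:
      - ../auto-generated.yaml
  dev:
    values:
      # Hypothetical file, e.g. with helm.nginx_ingress.metrics_enabled: false
      - ../dev-values.yaml
  prod:
    values:
      # Hypothetical file, e.g. with helm.nginx_ingress.metrics_enabled: true
      - ../prod-values.yaml
```

You would then pick one with `helmfile --environment prod sync`; when `--environment` is omitted, helmfile falls back to the environment named `default`.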

With this in place, we can start deploying the helm charts in our Kubernetes cluster:

$ helmfile sync

This will start deploying the infra apps in the Kubernetes cluster. As an added bonus, the sub-helmfiles are processed in the order they are listed in the main helmfile. This is useful if a chart depends on another one being installed first (e.g. external-dns after kube2iam).

Unleashing helmfile’s templatization power

One of helmfile's most interesting features is the ability to use templatization for the helm chart values (a feature that helm lacks). Everything you see in the helmfile can be templatized. Let's take the cluster-autoscaler as an example. Suppose we want to deploy this chart in two Kubernetes clusters: one located in AWS and one in Azure. Because the cluster autoscaler talks to the cloud provider's API, we'll need to customize the chart values depending on the provider. For instance, AWS requires an IAM role, while Azure needs an azureClientID/azureClientSecret pair. Let's see how we can implement this behavior with helmfile.

Note that you can also find the example below in the helmfile-examples repo on GitHub.

cluster-autoscaler.yaml

    ---
    bases:
      - envs/environments.yaml
    ---
    releases:
      - name: "cluster-autoscaler"
        namespace: "cluster-autoscaler"
        labels:
          chart: "cluster-autoscaler"
          repo: "stable"
          component: "autoscaler"
        chart: "stable/cluster-autoscaler"
        version: {{ .Environment.Values.helm.autoscaler.version }}
        wait: true
        # Reads helm.autoscaler.enabled from the environment values; defaults to true if unset
        installed: {{ .Environment.Values.helm | getOrNil "autoscaler.enabled" | default true }}
        set:
          - name: rbac.create
            value: true
        {{ if eq .Environment.Values.helm.autoscaler.cloud "aws" }}
          - name: "cloudProvider"
            value: "aws"
          - name: "autoDiscovery.clusterName"
            value: {{ .Environment.Values.helm.autoscaler.clusterName }}
          - name: awsRegion
            value: {{ .Environment.Values.helm.autoscaler.aws.region }}
          - name: "podAnnotations.iam\\.amazonaws\\.com\\/role"
            value: {{ .Environment.Values.helm.autoscaler.aws.arn }}
        {{ else if eq .Environment.Values.helm.autoscaler.cloud "azure" }}
          - name: "cloudProvider"
            value: "azure"
          - name: azureClientID
            value: {{ .Environment.Values.helm.autoscaler.azure.clientId }}
          - name: azureClientSecret
            value: {{ .Environment.Values.helm.autoscaler.azure.clientSecret }}
        {{ end }}

As you can see above, we are templatizing the helm deployment for the cluster-autoscaler chart. Depending on the selected environment, we end up with either AWS- or Azure-specific values.

envs/environments.yaml

    environments:
      aws:
        values:
          - aws-env.yaml
      azure:
        values:
          - azure-env.yaml

envs/aws-env.yaml

    helm:
      autoscaler:
        cloud: aws
        version: 0.14.2
        clusterName: experiments-k8s-cluster
        aws:
          arn: arn:aws:iam::00000000000:role/experiments-k8s-cluster-autoscaler
          region: us-east-1

envs/azure-env.yaml

    helm:
      autoscaler:
        cloud: azure
        version: 0.14.2
        azure:
          clientId: "secret-value-here"
          clientSecret: "secret-value-here"

And now, to select the desired cloud provider we can run:

    $ helmfile --environment aws sync
    # or
    $ helmfile --environment azure sync

Templatize the entire values file

You can go even further and templatize the whole values file by giving it a .gotmpl extension; helmfile renders such files as Go templates before passing them to helm.

    releases:
      - name: "velero"
        chart: "stable/velero"
        values:
          - velero-values.yaml.gotmpl

You can see an example on the GitHub helmfile-examples repo.
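As an illustration, a velero-values.yaml.gotmpl could pull the bucket name from the environment values instead of hard-coding it. This is a hypothetical sketch: it assumes velero sits under the `helm` key in auto-generated.yaml, and the `configuration.*` keys assume the layout of the stable/velero chart's values at the time, so check them against the chart version you actually deploy.

```yaml
# velero-values.yaml.gotmpl (hypothetical sketch)
# helmfile renders this file as a Go template, then hands the result to helm.
configuration:
  provider: aws
  backupStorageLocation:
    name: default
    # Taken from helm.velero.s3_bucket in the selected environment's values
    bucket: {{ .Environment.Values.helm.velero.s3_bucket }}
```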

Using templatization for the repos file

We can define a list of helm repos from which to fetch the helm charts. The stable repository is an obvious choice, but we can also add private helm repositories. The cool thing is that we can use templatization to provide the credentials.

commons/repos.yaml

    ---
    repositories:
      # Stable repo of official helm charts
      - name: "stable"
        url: "https://kubernetes-charts.storage.googleapis.com"
      # Incubator repo for helm charts
      - name: "incubator"
        url: "https://kubernetes-charts-incubator.storage.googleapis.com"
      # Private repository, with credentials coming from the env file
      - name: "my-private-helm-repo"
        url: "https://my-repo.com"
        username: {{ .Environment.Values.artifactory.username }}
        password: {{ .Environment.Values.artifactory.password }}

To protect your credentials, helmfile supports injecting encrypted secret values per environment. For more details, see the environment secrets section of the helmfile documentation.
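A minimal sketch of what that could look like, assuming the helm-secrets plugin is installed and a hypothetical encrypted ../secrets.yaml holds the `artifactory.username` and `artifactory.password` keys referenced in repos.yaml:

```yaml
# commons/environments.yaml with an encrypted secrets file (sketch)
environments:
  default:
    values:
      - ../auto-generated.yaml
    secrets:
      # Hypothetical file, encrypted via the helm-secrets plugin;
      # helmfile decrypts it and merges its keys into .Environment.Values
      - ../secrets.yaml
```

With this layout the plain values file can live in version control alongside the encrypted one, and only whoever runs helmfile needs the decryption key.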

Tillerless

One thing that bugged us about helm was that it required the Tiller daemon to be installed inside the Kubernetes cluster before any helm chart could be deployed. As a refresher, helm (v2) has two parts: a client (helm) and a server (Tiller). Besides the security concerns, it also added complexity: we had to set up the role and service account and then perform the actual Tiller installation.

The good news is that helmfile can be set up to run in tillerless mode. When this is enabled, helmfile uses the helm-tiller plugin, which basically runs Tiller locally, so there is no need to install the Tiller daemon inside the Kubernetes cluster anymore. If you're wondering what happens when multiple people install charts using this tillerless approach, worry not: the helm state information is still stored in Kubernetes, as secrets in the selected namespace.

    helmDefaults:
      tillerNamespace: helm
      tillerless: true

When running in tillerless mode, you can list the installed charts this way (the first helm after run is the Tiller namespace set in tillerNamespace):

$ helm tiller run helm -- helm list

If you want to find out more about the plugin, here's a pretty cool article from its main developer. We're also looking forward to the Helm 3 release, which will get rid of Tiller altogether.

Conclusion

Helmfile does its job, and does it well. It has strong community support, and its templatization power is just wow. If you haven't already, go ahead and give it a spin: https://github.com/roboll/helmfile